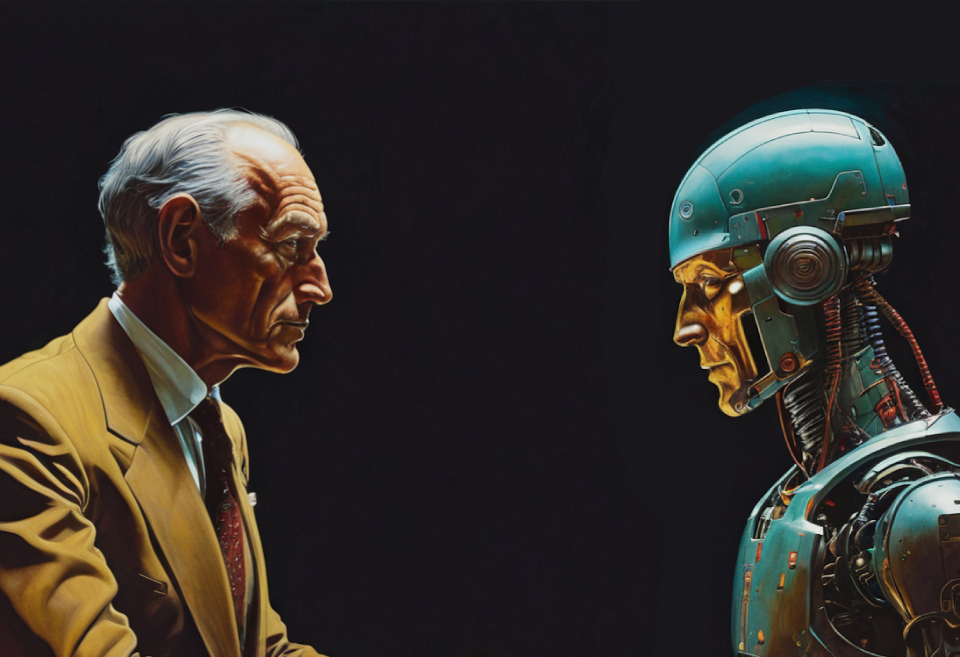

ChatGPT: A Hallucination or Revelation?

OpenAI's ChatGPT (Chat Generative Pre-trained Transformer), the large language model powering the chatbot service of the same name, has captivated the world's attention with its ability to generate remarkably human-like text. However, behind the magic lies a more complex story about the model's reliability, its capabilities, and the company behind it.

Hallucination and Degradation

When you start a conversation with ChatGPT, its initial responses are reassuring and informative. Yet as the conversation progresses, ChatGPT may provide inaccurate information. This happens most often when it is asked about topics outside its training data. Tests have shown that ChatGPT struggles with basic math and sometimes fabricates interview quotes.

This hallucinatory tendency plagues large language models such as ChatGPT. They are trained on statistical correlations in vast text datasets, not on verified facts. As a result, they are skilled at producing believable responses but can bend facts and logic to fit the answer they are constructing, all to satisfy the directive of answering users' questions. These concerns have prompted some universities to ban the use of ChatGPT in an effort to combat plagiarism and protect academic integrity. Scientists and researchers have also voiced concerns about its use in research. Even OpenAI has warned against relying on ChatGPT's outputs for health, legal and financial advice.
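The point about learning from correlations rather than facts can be made concrete with a deliberately tiny example. The sketch below uses a bigram model, a drastically simplified stand-in chosen purely for illustration (ChatGPT is a transformer, not a bigram model). The two-sentence corpus and the function names `train_bigrams`, `generate` and `is_reachable` are hypothetical. The model only records which word has followed which, yet that is enough for it to assemble a fluent but false sentence out of two true ones.

```python
import random
from collections import defaultdict

# Illustrative toy only: a bigram model, NOT how ChatGPT actually works.

def train_bigrams(corpus: str) -> dict[str, list[str]]:
    """Record, for each word, every word that followed it in the corpus."""
    words = corpus.split()
    table: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        table[current].append(nxt)
    return dict(table)

def generate(table: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Produce text by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = table.get(out[-1])
        if not followers:  # dead end: this word never had a successor
            break
        out.append(rng.choice(followers))
    return " ".join(out)

def is_reachable(table: dict[str, list[str]], sentence: str) -> bool:
    """True if every adjacent word pair in `sentence` occurred in the training corpus."""
    words = sentence.split()
    return all(nxt in table.get(cur, []) for cur, nxt in zip(words, words[1:]))

# Hypothetical corpus containing only true statements.
corpus = "the moon orbits the earth . the earth orbits the sun ."
table = train_bigrams(corpus)

print(generate(table, "the", 8))

false_claim = "the earth orbits the moon"
print(is_reachable(table, false_claim))  # True: each word pair appears in the corpus
print(false_claim in corpus)             # False: the corpus never states this
```

Every step of the false sentence is locally "believable" because each word pair was seen in training; nothing in the model checks whether the whole claim is true. Real language models are vastly more sophisticated, but this gap between statistical plausibility and factual grounding is the intuition behind the term "hallucination".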

Some observers even suggest that ChatGPT's basic capabilities have degraded over time, arguing that OpenAI's tweaks to improve safety and coherence have reduced its reasoning skills.

For OpenAI, perceptions of ChatGPT's competence carry high stakes. The company's reputation hinges on delivering ever-smarter AI to fulfil its mission of developing artificial general intelligence (AGI). ChatGPT is the public face of that progress, and over-promising risks undermining public trust.


The Company and Competition

OpenAI began in 2015 as a nonprofit research lab aiming to develop AI safely for humanity's benefit. In 2019 it created a "capped-profit" arm to raise sufficient financing, which has since attracted a reported $13 billion from Microsoft in exchange for exclusive licensing rights. This shift has fuelled criticism that OpenAI has drifted from its lofty ideals towards commercial imperatives.

OpenAI's alliance with Microsoft gives it an unparalleled war chest to pursue AGI. However, the exclusive partnership also restricts OpenAI's options, gives Microsoft considerable sway and creates potential conflicts of interest. Microsoft's benefits extend well beyond any financial return on its investment: it gains access to a leading AI innovator, strengthens Azure's position as a cloud platform for AI computing, and integrates OpenAI's products across its own offerings.

Nonetheless, OpenAI maintains that its mission is to develop artificial general intelligence (AGI) and ensure that it is safe. This focus on AGI differentiates it from Big Tech competitors chasing profits. However, despite its nonprofit roots, OpenAI increasingly resembles a hot startup that needs regular product releases to satisfy investors.

In the quest to develop powerful AI models, OpenAI is not alone. Several competitors are also vying to offer alternative solutions and push the boundaries of AI capabilities.

Some of OpenAI's talent has left to form rivals. Anthropic, founded by former OpenAI researchers, offers Claude, a chatbot focused on safe, helpful conversations. Anthropic is a public benefit corporation rather than a nonprofit, but its safety-focused mission echoes OpenAI's original ethos. Inflection AI's recently launched Pi chatbot also emphasises kindness and empathy.

Claude by Anthropic: Focus on Safety

Rival Anthropic adopts a different stance with its Claude chatbot. While Claude is similarly impressive in conversation, Anthropic cautions against overestimating its skills. "Claude cannot perform many basic tasks a human can," its website admits plainly. Anthropic also stresses avoiding harmful misinformation. This positions Claude as a useful assistant rather than a human-like AI.

Pi by Inflection AI: Prioritising Empathy

Inflection AI, co-founded by DeepMind co-founder Mustafa Suleyman, launched a chatbot called Pi, marketed as a "kind and supportive" conversational AI. Pi aims for greater empathy, adapting its supportive conversations to each user. While Pi is currently less capable than ChatGPT, its ethical focus resonates given concerns over OpenAI's direction.

AGI... Are We There Yet?

OpenAI originated with the ambitious mission to develop artificial general intelligence (AGI) for humanity's benefit. However, its transformation into a profit-driven unicorn sits uneasily with that non-profit ethos. Meanwhile, claims that ChatGPT grows ever smarter are challenged by its fluctuating performance over time.

This unpredictability highlights the difficulty of achieving true AGI. We cannot build reliable systems while our AI models remain black boxes whose behaviour shifts in opaque ways. Current systems like ChatGPT may impress, but they still lack a robust understanding of the world.

OpenAI itself warns against relying on its technology for critical purposes. The public's receptiveness exposes our vulnerability to superficially credible fictions. Developing real AGI requires seeing through this illusion and reckoning with a hard truth: these tools remain primitive mimics of intelligence. Their value lies in revealing how far we are from replicating the complexity of human cognition.

Is It The Truth or A Hallucination?

ChatGPT and other large language models represent a technological leap forward with immense potential. Yet as generative models become more fluent, their human-like hallucinations become harder to distinguish from real intelligence.

ChatGPT's real revelation is the gap it illuminates between artificial and human cognition. To bridge that gap, we need to recognise these flaws and demand more human-like meaning, logic and knowledge in AI responses. This is the challenge that companies like OpenAI must overcome to develop AI that brings us closer to the truth.